Artificial neural networks

Artificial neural networks (ANNs) are powerful tools in the machine learning toolkit, used for objectives such as image recognition, natural language processing, and game playing. ANNs learn in a similar way to other machine learning algorithms: by using training data. They are best suited to unstructured data, where it’s difficult to understand how features relate to one another. This chapter covers the inspiration for ANNs, shows how the algorithm works, and explains how ANNs are designed to solve different problems.

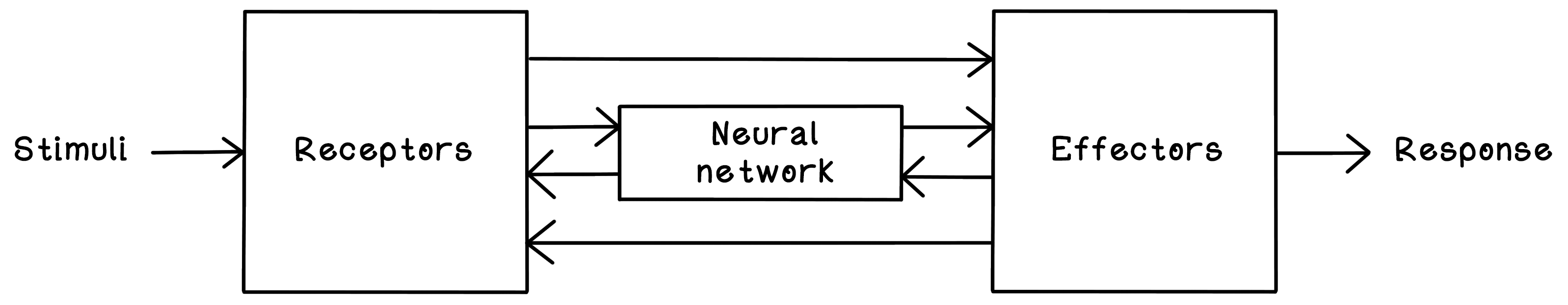

Biological neural networks consist of interconnected neurons that pass information to one another by using electrical and chemical signals. Each neuron adjusts the information it passes on, and together the neurons accomplish a specific function. When you grab a cup and take a sip of water, millions of neurons process the intention of what you want to do, the physical action needed to accomplish it, and the feedback that tells you whether you succeeded. Think about little children learning to drink from a cup. They usually start out poorly, dropping the cup a lot. Then they learn to grab it with two hands. Gradually, they learn to grab the cup with a single hand and take a sip without any problems. This process takes months. What’s happening is that their brains and nervous systems are learning through practice, or training. Our bodies contain billions of neurons that learn from signals about what we are doing, which goal we are working toward, and how successful we are.

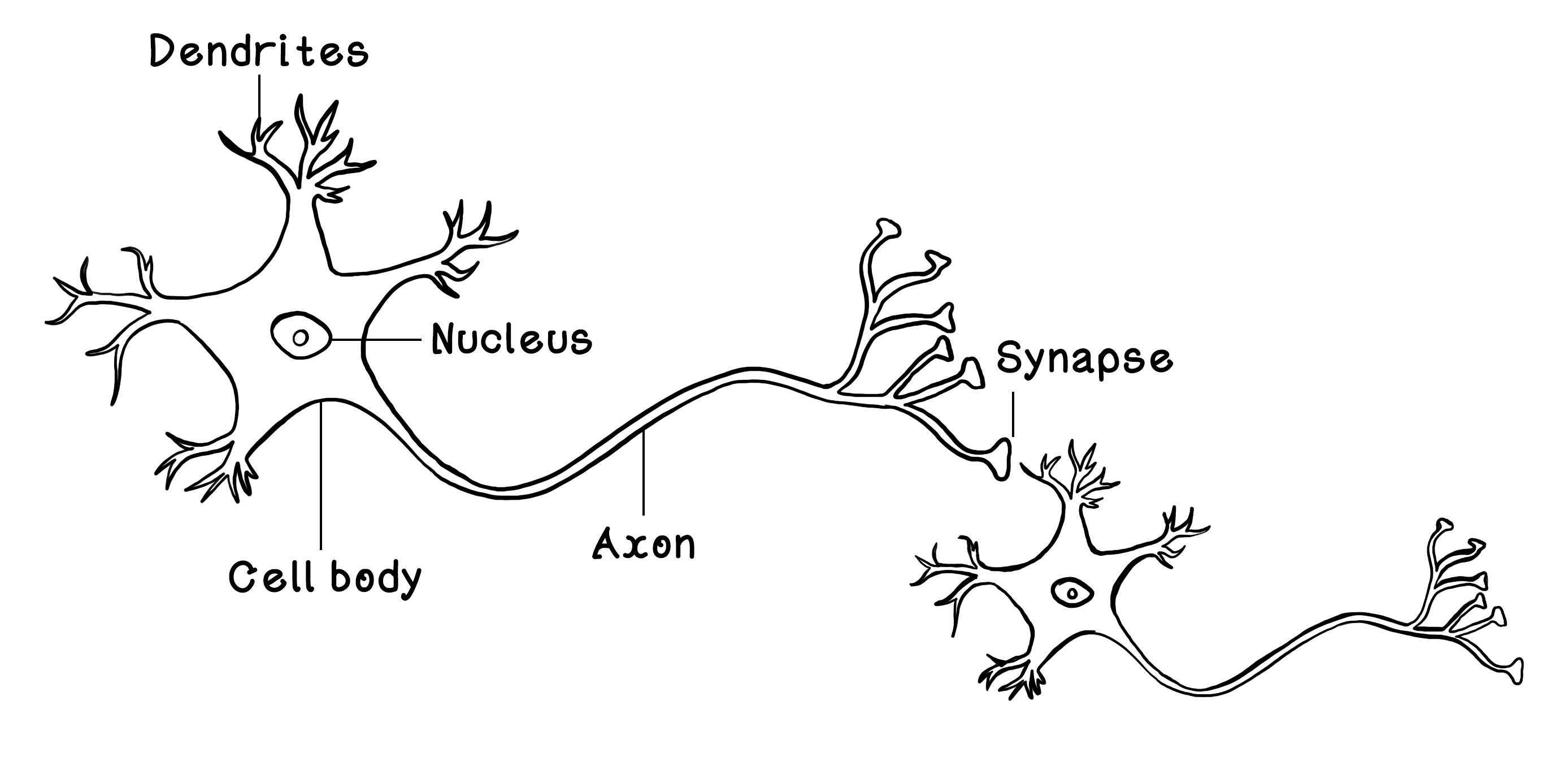

In simplified terms, a neuron (figure 9.4) consists of dendrites that receive signals from other neurons; a cell body and a nucleus that activate and adjust the signal; an axon that passes the signal on to other neurons; and synapses that carry the signal, adjusting it in the process, before it reaches the next neuron’s dendrites. Approximately 90 billion of these neurons work together to give our brains the high level of intelligence that we know.

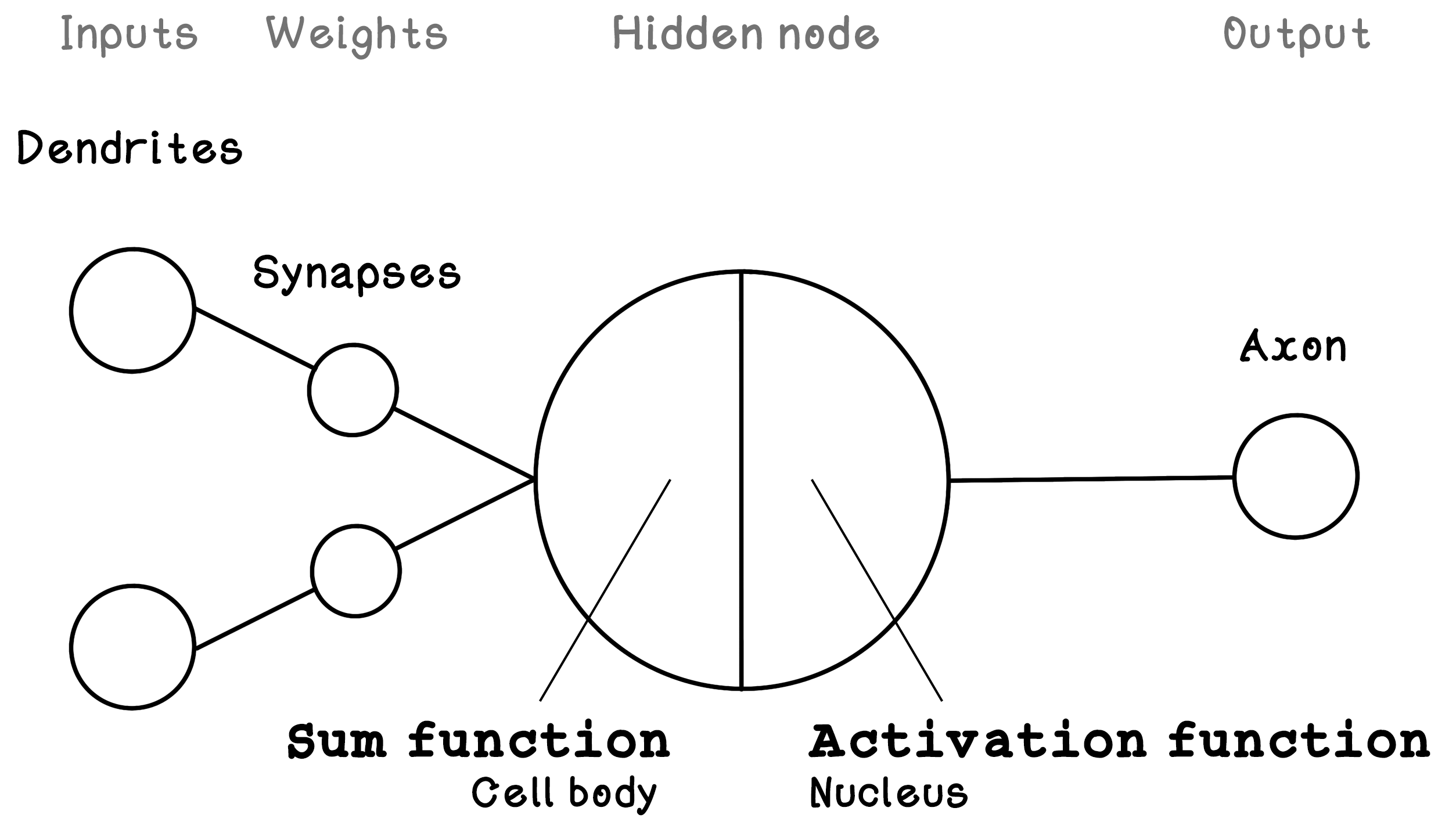

The neuron is the fundamental building block of the brain. As mentioned earlier, it accepts many inputs from other neurons, processes those inputs, and transfers the result to other connected neurons. ANNs are based on the Perceptron, a logical representation of a single biological neuron. Like a neuron, the Perceptron receives inputs (like dendrites), alters these inputs by using weights (like synapses), processes the weighted inputs (like the cell body and nucleus), and outputs a result (like an axon). The Perceptron is only loosely based on a neuron: you will notice that the synapses are depicted after the dendrites, representing the influence of synapses on incoming inputs.

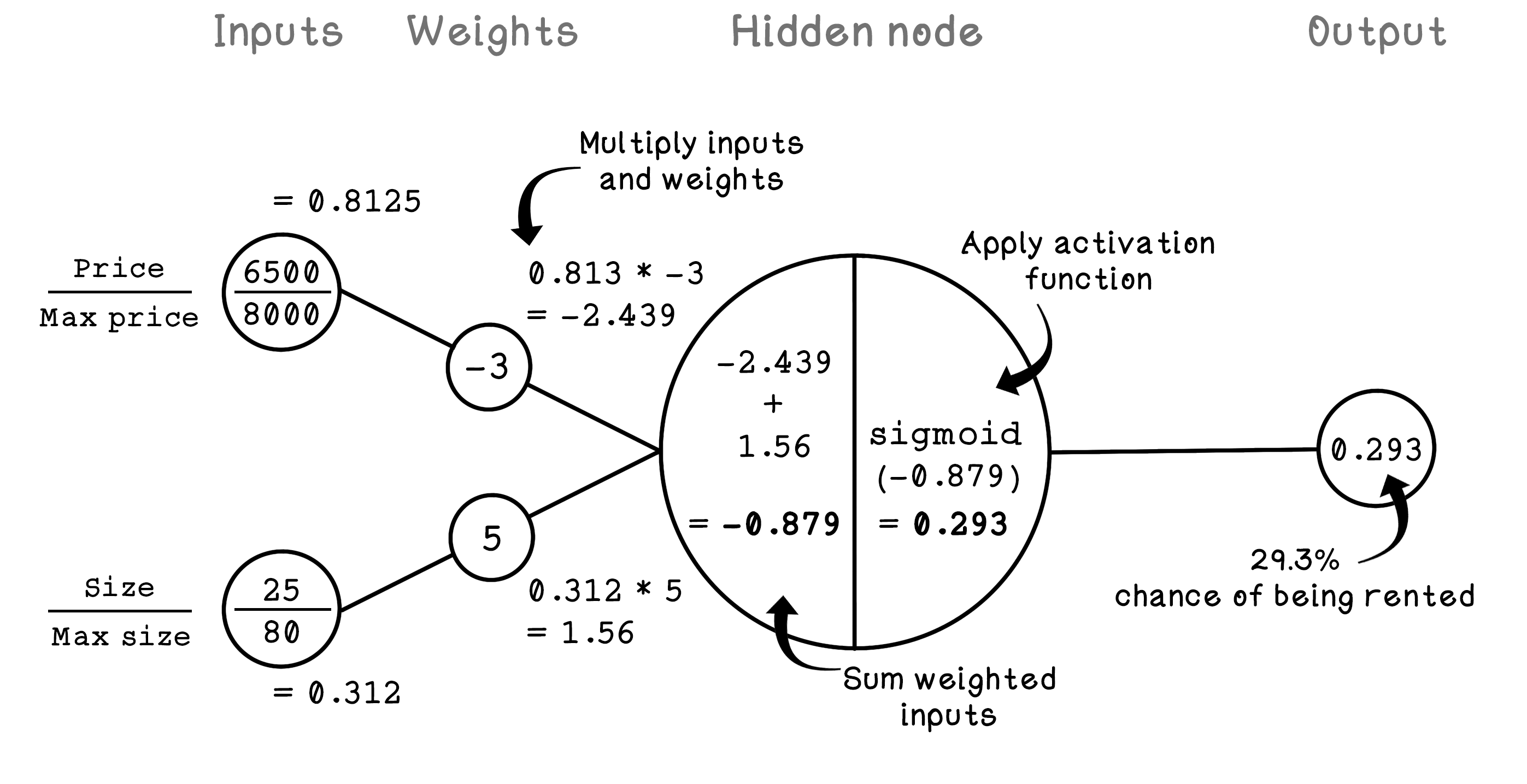

The components of the Perceptron are described by variables that are useful for calculating the output. Weights modify the inputs, the hidden node processes the weighted values, and the result is provided as the output. Here is a brief description of the components of the Perceptron:

- Inputs — Describe the input values. In a neuron, these values would be an input signal.

- Weights — Describe the weights on each connection between an input and the hidden node. Weights influence the intensity of an input and result in a weighted input. In a neuron, these connections would be the synapses.

- Hidden node (sum and activation) — Sums the weighted input values and then applies an activation function to the summed result. An activation function determines the activation/output of the hidden node/neuron.

- Output — Describes the final output of the Perceptron.
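The components above can be sketched as a single function. This is a minimal illustration, not the chapter’s own implementation: it assumes a sigmoid activation function and an optional bias term, neither of which the text specifies, and the inputs and weights in the example are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Activation function (an assumption here): squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def perceptron(inputs: list[float], weights: list[float], bias: float = 0.0) -> float:
    """Compute the output of a single Perceptron.

    1. Weights: multiply each input by the weight on its connection.
    2. Hidden node (sum): add up the weighted inputs, plus an optional bias.
    3. Hidden node (activation): pass the sum through the activation function.
    4. Output: return the activated value.
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


# Hypothetical inputs and hand-picked weights.
# Weighted sum = 0.5*0.4 + 0.2*(-0.6) + 0.9*0.3 = 0.35, so the output is sigmoid(0.35), about 0.587.
print(perceptron([0.5, 0.2, 0.9], [0.4, -0.6, 0.3]))
```

A negative weight (here -0.6) dampens its input, much as the text describes weights influencing the intensity of each input.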

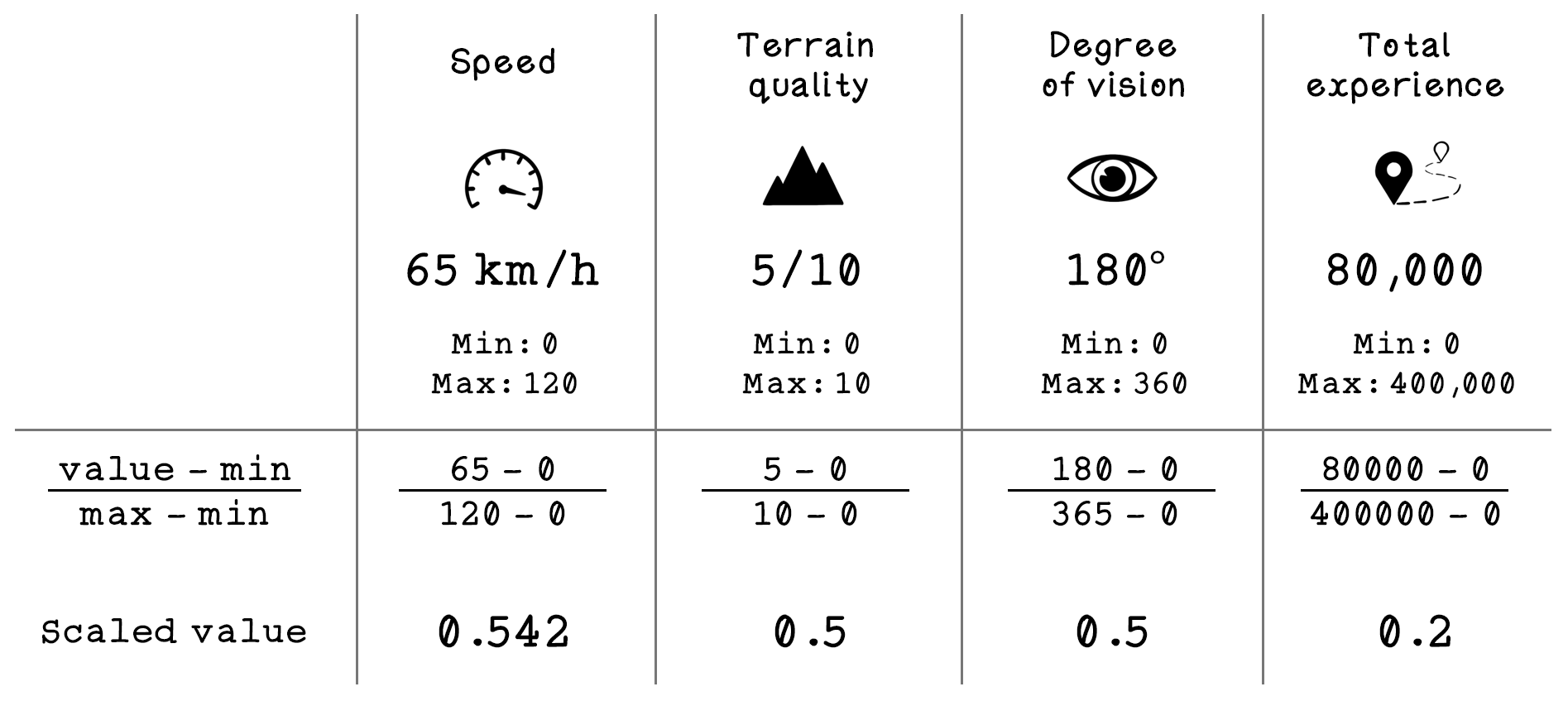

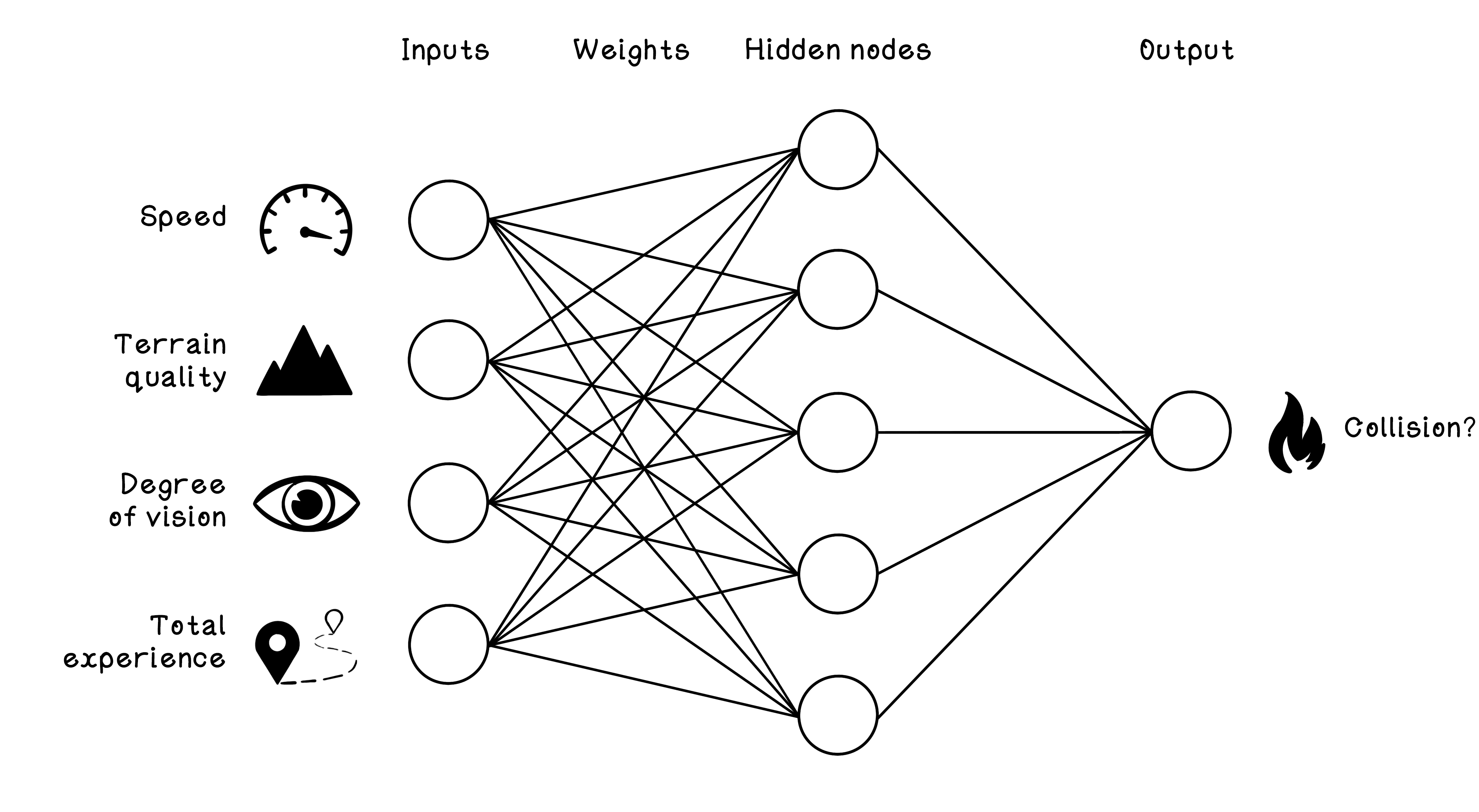

The Perceptron is useful for solving simple problems, but as the dimensions and complexity of the data increase, a single node becomes less practical; on its own, it can separate the data only with a linear boundary. ANNs use the principles of the Perceptron and apply them to many hidden nodes instead of a single one. To explore how multi-node ANNs work, consider an example dataset about car collisions. Suppose that we have data from several cars at the moment an unforeseen object enters their path. The dataset contains features describing the conditions and whether a collision occurred:

- Speed — The speed at which the car was traveling before encountering the object

- Terrain quality — The quality of the road on which the car was traveling before encountering the object

- Degree of vision — The driver’s degree of vision before the car encountered the object

- Total experience — The total driving experience of the driver of the car

- Collision occurred? — Whether a collision occurred or not

| Speed | Terrain quality | Degree of vision | Total experience | Collision occurred? |
|---|---|---|---|---|
| 75 mph | 2/10 | 148° | 180,626 mi | Accident |
| 93 mph | 2/10 | 69° | 288,001 mi | Accident |
| 126 mph | 10/10 | 166° | 274,291 mi | Accident |
| 8 mph | 7/10 | 212° | 184,746 mi | No accident |
| 62 mph | 6/10 | 110° | 330,085 mi | No accident |
| 116 mph | 10/10 | 50° | 179,895 mi | Accident |
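Before the rows in the table above can be fed to a network, they must become numbers. The sketch below strips the units, encodes the label as 1 (accident) or 0 (no accident), and applies min-max scaling so that, for example, mileage in the hundreds of thousands does not dwarf a terrain score out of 10. The scaling step is an assumption, a common preprocessing choice rather than something the chapter prescribes, and the helper name `min_max_scale` is hypothetical.

```python
# The six rows from the table, with units stripped:
# speed (mph), terrain quality (out of 10), vision (degrees), experience (miles), label.
rows = [
    (75, 2, 148, 180_626, "Accident"),
    (93, 2, 69, 288_001, "Accident"),
    (126, 10, 166, 274_291, "Accident"),
    (8, 7, 212, 184_746, "No accident"),
    (62, 6, 110, 330_085, "No accident"),
    (116, 10, 50, 179_895, "Accident"),
]

# Features become the network inputs; the label becomes a binary target.
X = [list(map(float, r[:4])) for r in rows]
y = [1 if r[4] == "Accident" else 0 for r in rows]


def min_max_scale(matrix):
    """Hypothetical helper: rescale each column to [0, 1] using its own min and max."""
    columns = list(zip(*matrix))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in matrix
    ]


X_scaled = min_max_scale(X)
print([round(v, 3) for v in X_scaled[0]])  # → [0.568, 0.0, 0.605, 0.005]
```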

An example ANN architecture can be used to classify whether a collision will occur based on the features we have. The features in the dataset must be mapped as inputs to the ANN, and the class that we are trying to predict is mapped as the output of the ANN. In this example, the input nodes are speed, terrain quality, degree of vision, and total experience; the output node is whether a collision happened.
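The architecture just described can be sketched as a forward pass: four input nodes feed a layer of hidden nodes, each working like the Perceptron, and those feed a single output node. This is a minimal sketch under several assumptions not taken from the chapter: a hidden layer of five nodes, sigmoid activations, random starting weights in [-1, 1] with zero biases, and hypothetical input values already scaled to [0, 1]. Because the network is untrained, its prediction is arbitrary; training would adjust the weights.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Assumed activation function for every hidden and output node."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One fully connected layer: each node sums its weighted inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, node_weights)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def init_layer(n_inputs, n_nodes, rng):
    """Random starting weights in [-1, 1] and zero biases (an arbitrary, untrained start)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_nodes)]
    biases = [0.0] * n_nodes
    return weights, biases


rng = random.Random(42)  # fixed seed so the sketch is reproducible
N_INPUTS, N_HIDDEN = 4, 5  # 4 features; the hidden size is an arbitrary choice
hidden_w, hidden_b = init_layer(N_INPUTS, N_HIDDEN, rng)
output_w, output_b = init_layer(N_HIDDEN, 1, rng)


def predict(features):
    """Return the network's estimated probability that a collision occurs."""
    hidden = layer(features, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)[0]


# Hypothetical scaled inputs: speed, terrain quality, degree of vision, total experience.
p = predict([0.57, 0.0, 0.60, 0.005])
print("Accident" if p >= 0.5 else "No accident", round(p, 3))
```

Treating the single output as a probability and thresholding it at 0.5 is one simple way to turn the output node into the binary "collision occurred?" answer.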

The simulation below shows how the ANN can classify whether a collision will occur based on these features. Start the model and, once it has trained enough, experiment with the scenarios to see how your guesses fare against the model.

[Interactive simulation. Training loop: a binary classifier trained on simulated driving data. New driving scenario: given speed, terrain quality, vision, and total experience, decide whether an accident will happen.]