Reinforcement learning

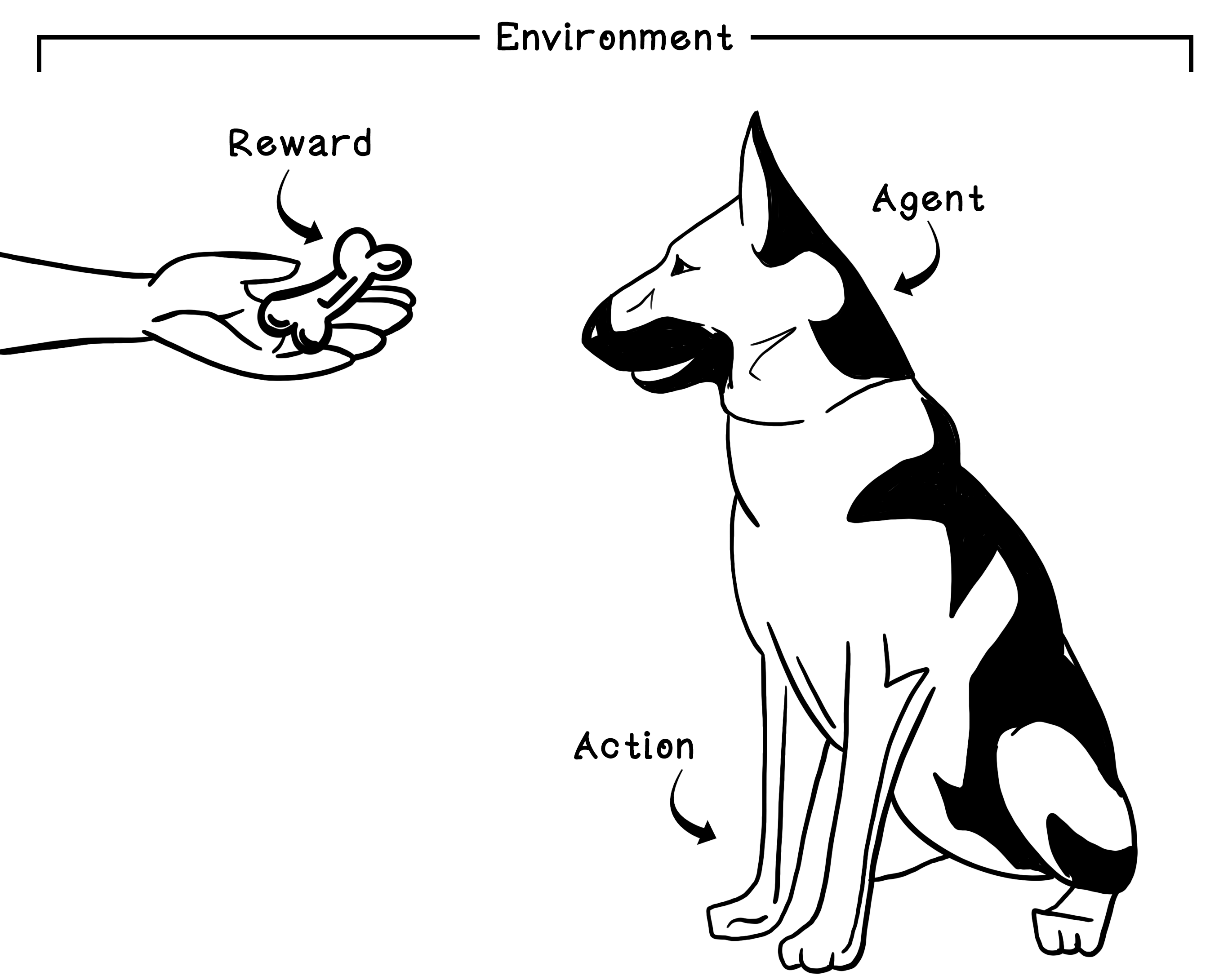

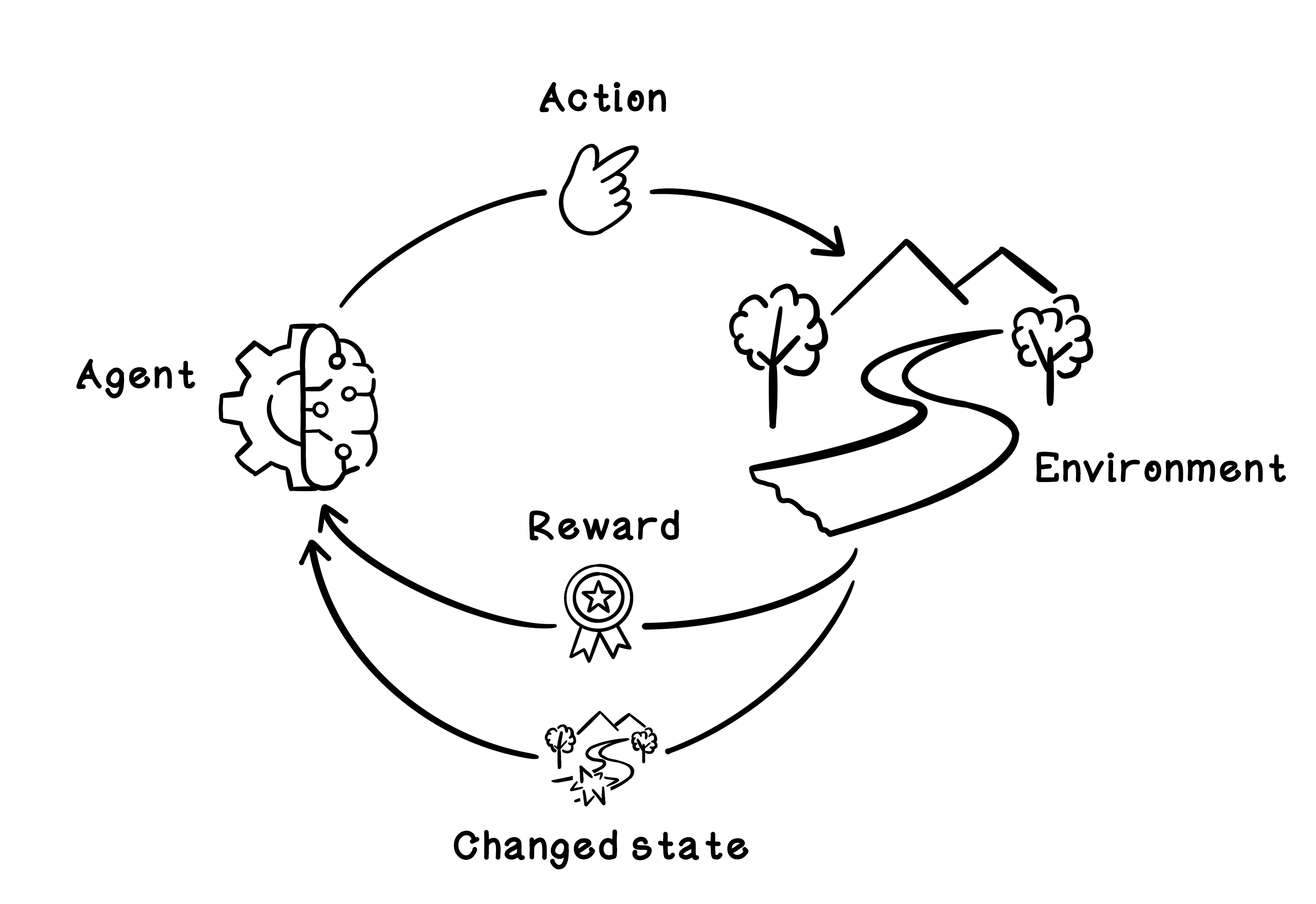

Reinforcement learning (RL) is an area of machine learning inspired by behavioral psychology. It is based on the cumulative rewards or penalties that an agent receives for the actions it takes in a dynamic environment. Think about a young dog growing up. The dog is the agent, and our home is its environment. When we want the dog to sit, we might simply say, “Sit.” The dog doesn’t understand English, so we might nudge it by lightly pushing down on its back. After the dog sits, we pet it or give it a treat, which is a welcome reward. We need to repeat this many times, but eventually we have positively reinforced the idea of sitting. The trigger in the environment is saying “Sit”; the behavior learned is sitting; and the reward is pets or treats.

Like other machine learning algorithms, a reinforcement learning model needs to be trained before it can be used. The training phase centers on exploring the environment and receiving feedback, given specific actions performed in specific circumstances or states. The life cycle of training a reinforcement learning model is based on the Markov Decision Process, which provides a mathematical framework for modeling decisions. By quantifying decisions made and their outcomes, we can train a model to learn what actions toward a goal are most favorable.

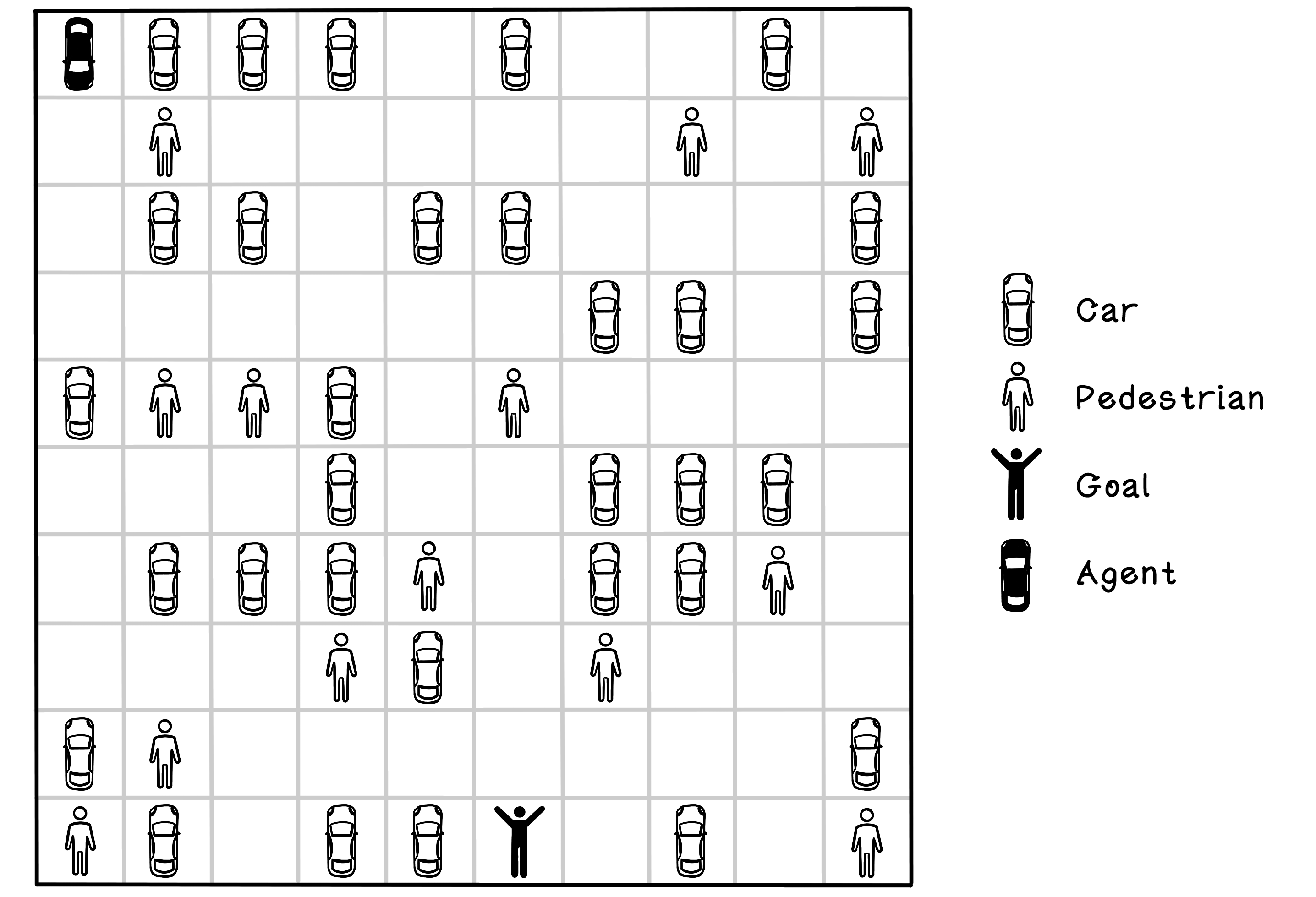

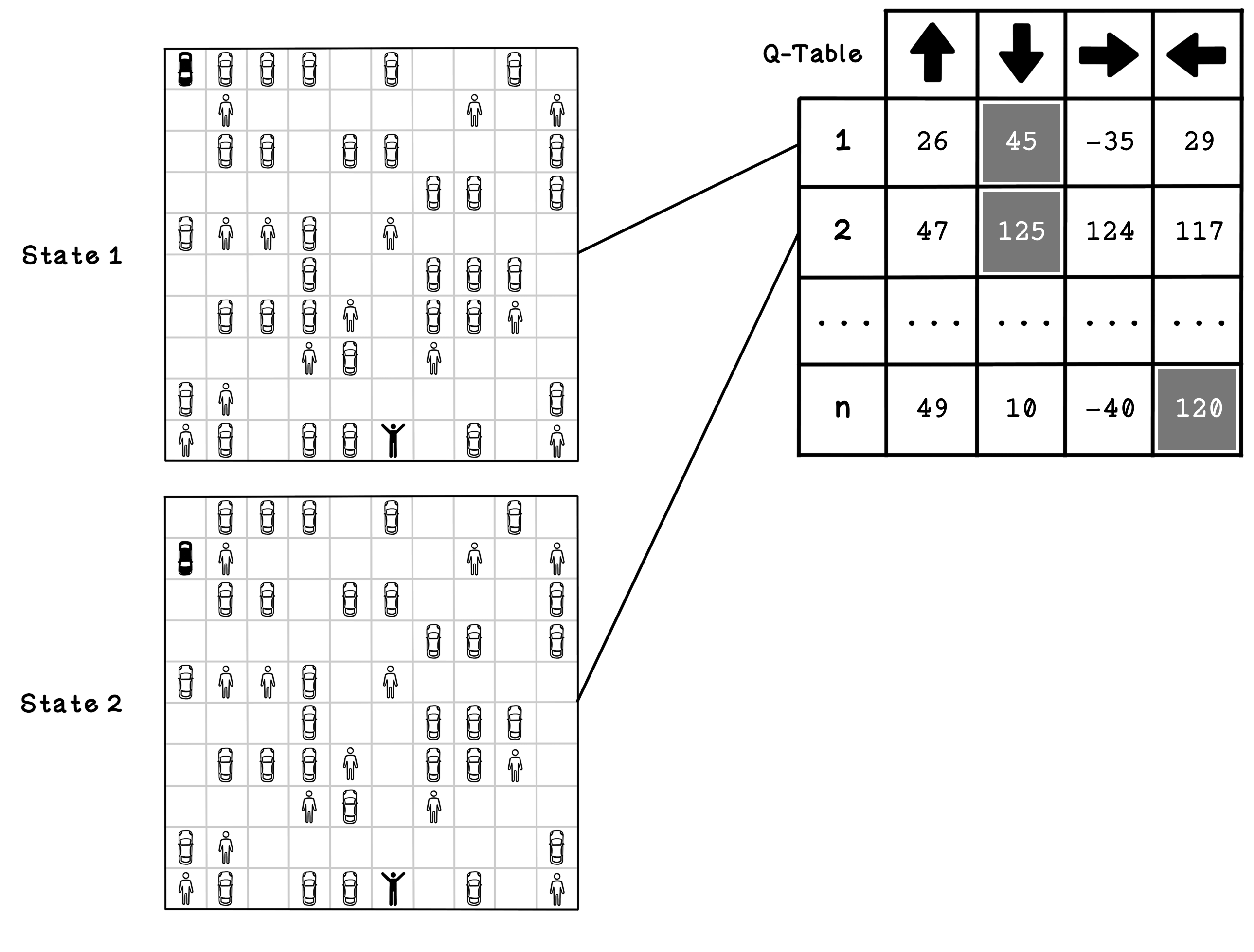

Imagine a parking-lot scenario containing several other cars and pedestrians. The starting position of the self-driving car and the location of its owner are represented as black figures. In this example, the self-driving car is the agent: it applies actions to the environment. The available actions are moving north, south, east, and west. Choosing an action moves the agent one block in that direction. The agent can’t move diagonally.

The simulator needs to model the environment, the actions of the agent, and the rewards received after each action. A reinforcement learning algorithm will use the simulator to learn through practice by taking actions in the simulated environment and measuring the outcome. The simulator should provide the following functionality and information at minimum:

- Initialize the environment—This function involves resetting the environment, including the agent, to the starting state.

- Get the current state of the environment—This function should provide the current state of the environment, which will change after each action is performed.

- Apply an action to the environment—This function involves having the agent apply an action to the environment. The environment is affected by the action, which may result in a reward.

- Calculate the reward of the action—This function is related to applying the action to the environment. The reward for the action and effect on the environment need to be calculated.

- Determine whether the goal is achieved—This function determines whether the agent has achieved the goal. Achieving the goal is sometimes represented simply as the simulation being complete. In an environment in which the goal cannot be achieved, the simulator needs to signal completion when it deems necessary.
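The functions listed above can be sketched as a small Python class. This is only an illustration under stated assumptions: the class name `ParkingLotSimulator`, its method names, the 3x3 grid, the single obstacle, the step limit, and all reward values are hypothetical choices, not taken from the parking-lot example itself.

```python
# Hypothetical action set: (row change, column change) for each move.
ACTIONS = {"north": (-1, 0), "south": (1, 0), "east": (0, 1), "west": (0, -1)}


class ParkingLotSimulator:
    """Toy grid simulator; layout and reward values are illustrative assumptions."""

    def __init__(self, rows=3, cols=3, start=(0, 0), goal=(2, 2),
                 obstacles=((1, 1),), max_steps=50):
        self.rows, self.cols = rows, cols
        self.start, self.goal = start, goal
        self.obstacles = set(obstacles)   # e.g. positions of other cars
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        """Initialize the environment: move the agent back to the start."""
        self.agent = self.start
        self.steps = 0
        return self.get_state()

    def get_state(self):
        """Current state; here it is just the agent's (row, col) position."""
        return self.agent

    def apply_action(self, action):
        """Move the agent one block and return the reward for that move."""
        d_row, d_col = ACTIONS[action]
        target = (self.agent[0] + d_row, self.agent[1] + d_col)
        self.steps += 1
        in_bounds = 0 <= target[0] < self.rows and 0 <= target[1] < self.cols
        if in_bounds:
            self.agent = target           # moves off the grid leave the agent in place
        return self.calculate_reward(in_bounds)

    def calculate_reward(self, in_bounds):
        """Assumed reward scheme: penalties for bad moves, a bonus at the goal."""
        if not in_bounds:
            return -10                    # tried to leave the parking lot
        if self.agent in self.obstacles:
            return -100                   # collided with another car
        if self.agent == self.goal:
            return 100                    # reached the owner
        return -1                         # small cost for each ordinary move

    def is_goal_achieved(self):
        return self.agent == self.goal

    def is_done(self):
        """Signal completion on success, on a crash, or when steps run out."""
        return (self.is_goal_achieved()
                or self.agent in self.obstacles
                or self.steps >= self.max_steps)
```

A learning algorithm would call `reset()` at the start of each attempt, then alternate `get_state()` and `apply_action(...)` until `is_done()` returns `True`. The step limit is one way to signal completion in a run where the goal is never reached.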

Reinforcement Learning with Q-Learning

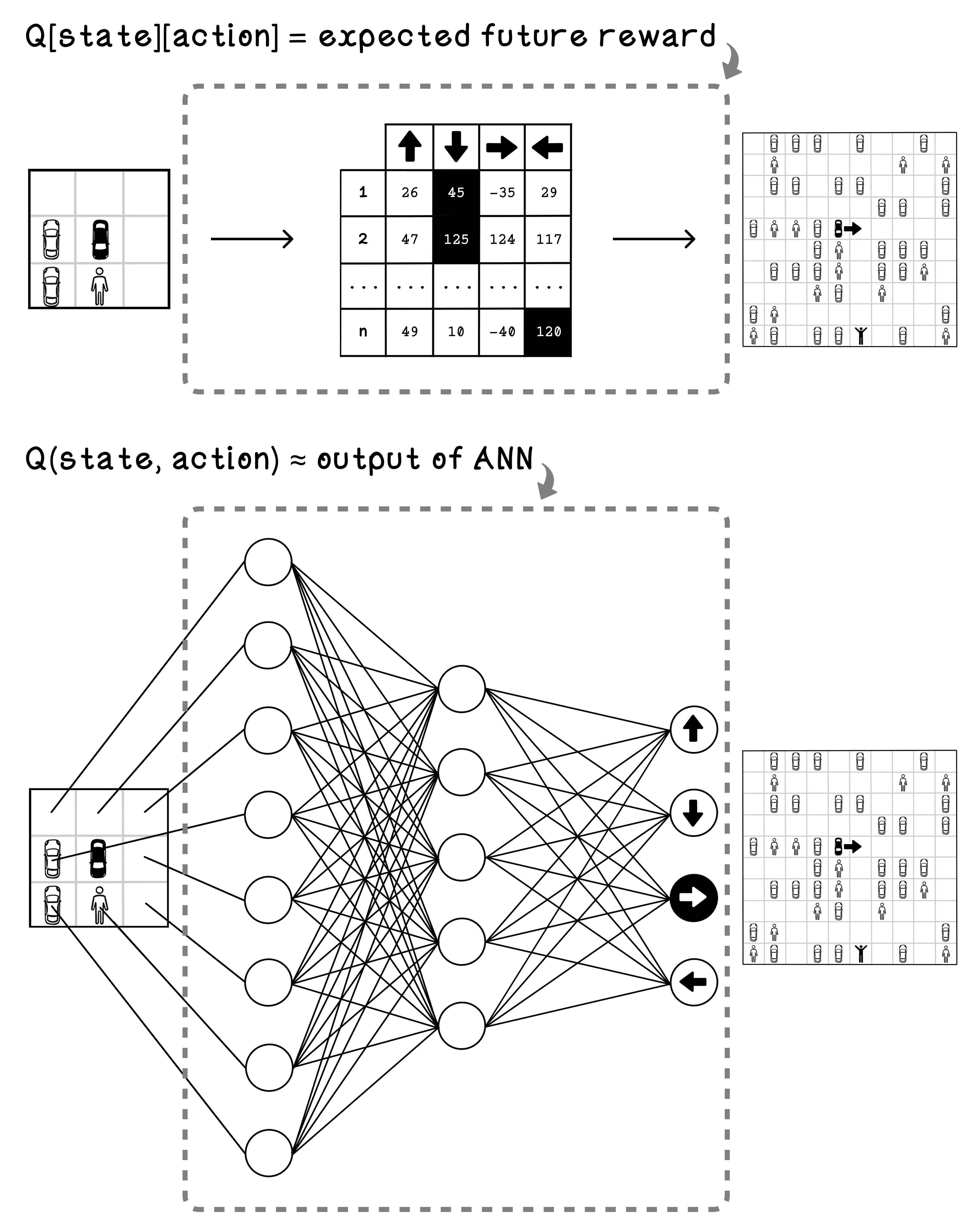

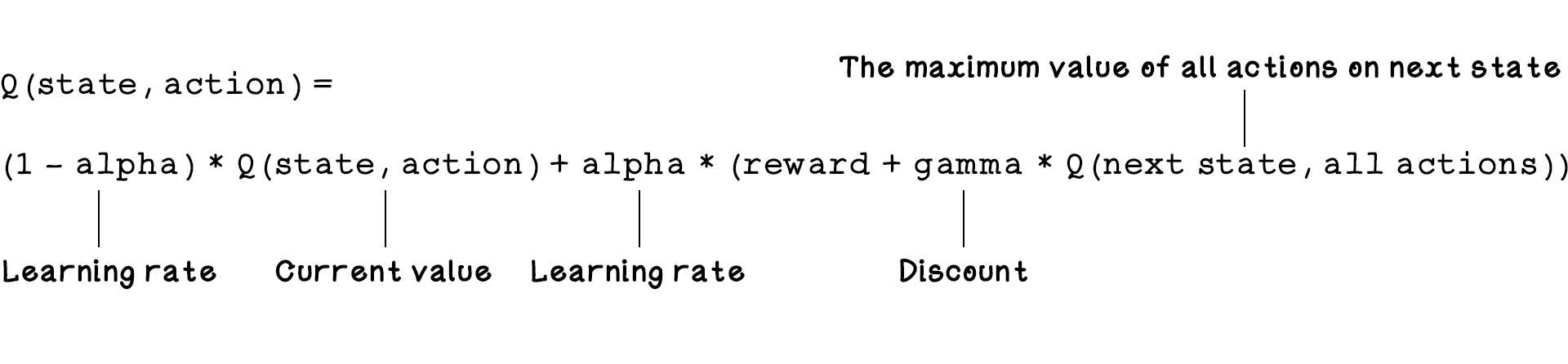

Q-learning is an approach in reinforcement learning that uses the states and actions in an environment to model a table that contains information describing favorable actions based on specific states. Q-learning is a model-free approach (meaning it doesn’t need to know the physics of the world beforehand). It learns to estimate the quality of taking actions in specific states.

Reinforcement learning with Q-learning employs a reward table called a Q-table. Think of a Q-table as a scorecard. The rows are the possible states, and the columns are the possible actions. Each cell contains a Q-Value (Quality Value). The table doesn’t explicitly store “the best action”; rather, it stores a score for every possible action. To find the best action, the agent looks at the row for its current state and picks the action with the highest Q-Value.
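As a rough illustration of the scorecard idea, a Q-table can be held as a dictionary of rows, one per state, with one Q-value per action. The state names and the numbers below are made up for the example, and `best_action` is a hypothetical helper.

```python
# Hypothetical Q-table: each row is a state, each entry an action's Q-value.
q_table = {
    "state_1": {"north": -0.5, "south": 1.2, "east": 0.3, "west": -0.8},
    "state_2": {"north": 0.1, "south": 0.4, "east": 2.0, "west": -0.2},
}


def best_action(q_table, state):
    """Look up the row for the state and pick the action with the highest Q-value."""
    row = q_table[state]
    return max(row, key=row.get)


print(best_action(q_table, "state_1"))
print(best_action(q_table, "state_2"))
```

Note that the table never stores the best action directly; it is recomputed from the row each time, so it changes automatically as the Q-values are updated.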

The point of a Q-table is to describe which actions are most favorable for the agent as it seeks a goal. The values that represent favorable actions are learned through simulating the possible actions in the environment and learning from the outcome and change in state.

It’s worth noting that the agent may either choose a random action or take an action from the Q-table, as shown later in figure 10.13. The Q represents the function that provides the reward, or quality, of an action in an environment.

Figure 10.11 depicts a trained Q-table and two possible states that may be represented by the action values for each state. In state 1, the agent is in the top-left corner, and in state 2, the agent is in the position below its previous state. These states and actions are specific to the problem we’re solving; another problem might allow the agent to move diagonally as well. Note that the number of states differs based on the environment and that new states can be added as they are discovered.

The Q-table encodes the estimated value of taking an action in a state. Once the table is fully trained (converged), the action with the largest value in a state’s row is considered the most beneficial. At the start of training, however, these numbers are only arbitrary initial guesses; they become reliable indicators of the best action only after many trials. Soon, we will see how they’re calculated.
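One common way to mix random actions with Q-table actions is an epsilon-greedy rule: with a small probability the agent explores at random, and otherwise it exploits the highest-valued action. The sketch below assumes that rule; the function name `choose_action` and the parameter name `epsilon` are illustrative, and the text does not confirm that this exact scheme is the one used.

```python
import random


def choose_action(q_row, epsilon, rng=random):
    """Pick an action from one Q-table row using an epsilon-greedy rule.

    q_row   -- dict mapping each action to its Q-value for the current state
    epsilon -- assumed exploration probability between 0 and 1
    rng     -- source of randomness (the random module or a random.Random)
    """
    if rng.random() < epsilon:
        # Explore: ignore the Q-values and pick any action at random.
        return rng.choice(list(q_row))
    # Exploit: take the action with the highest Q-value.
    return max(q_row, key=q_row.get)


row = {"north": -0.5, "south": 1.2, "east": 0.3, "west": -0.8}
print(choose_action(row, epsilon=0.0))   # with epsilon 0 the choice is always greedy
```

Early in training, when the Q-values are still unreliable, a higher exploration probability lets the agent discover states it would otherwise never visit.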

Play with the exploration rate, learning rate, and discount rate to see how they affect the agent’s behavior in the parking-lot simulation with Q-learning below.
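To make the roles of the learning rate and discount rate concrete, here is a minimal sketch of the standard Q-learning update, assuming a Q-table stored as nested dictionaries. The function name `q_update` and the example states are hypothetical.

```python
def q_update(q_table, state, action, reward, next_state, learning_rate, discount):
    """Apply one standard Q-learning update and return the new Q-value.

    The learning rate controls how far the old estimate moves toward the new
    target; the discount rate controls how much future value counts.
    """
    best_next = max(q_table[next_state].values())   # best value reachable next
    target = reward + discount * best_next          # what this step suggests
    old_value = q_table[state][action]
    q_table[state][action] = old_value + learning_rate * (target - old_value)
    return q_table[state][action]


# Illustrative values only.
q = {
    "s": {"north": 0.0, "south": 0.0},
    "t": {"north": 1.0, "south": 2.0},
}
print(q_update(q, "s", "south", reward=1.0, next_state="t",
               learning_rate=0.5, discount=0.5))
```

With a learning rate of 0 the table never changes, and with a discount rate of 0 the agent values only the immediate reward, which is a useful way to reason about the extremes when adjusting these settings.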