Large Language Models (LLMs)

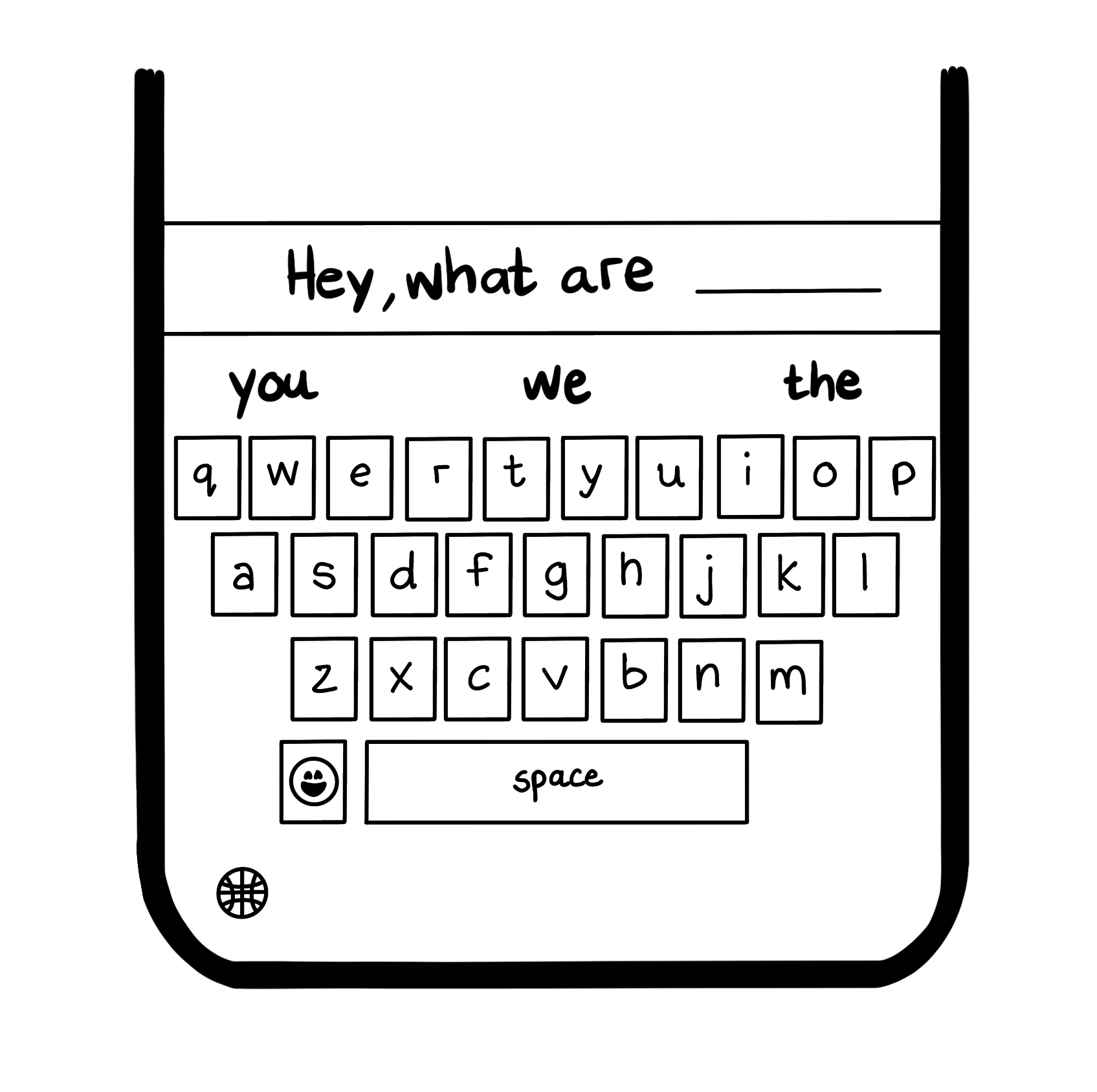

Large language models (LLMs) are machine learning models that specialize in natural language processing problems, such as language generation. Consider the autocomplete feature on your mobile device’s keyboard (figure 11.1). When you start typing “Hey, what are…”, the keyboard likely predicts that the next word is “you”, “we”, or “the”, because these are the most common next words after the phrase. It makes this choice by looking up a table of probabilities built from commonly available text. This simple table is a language model.

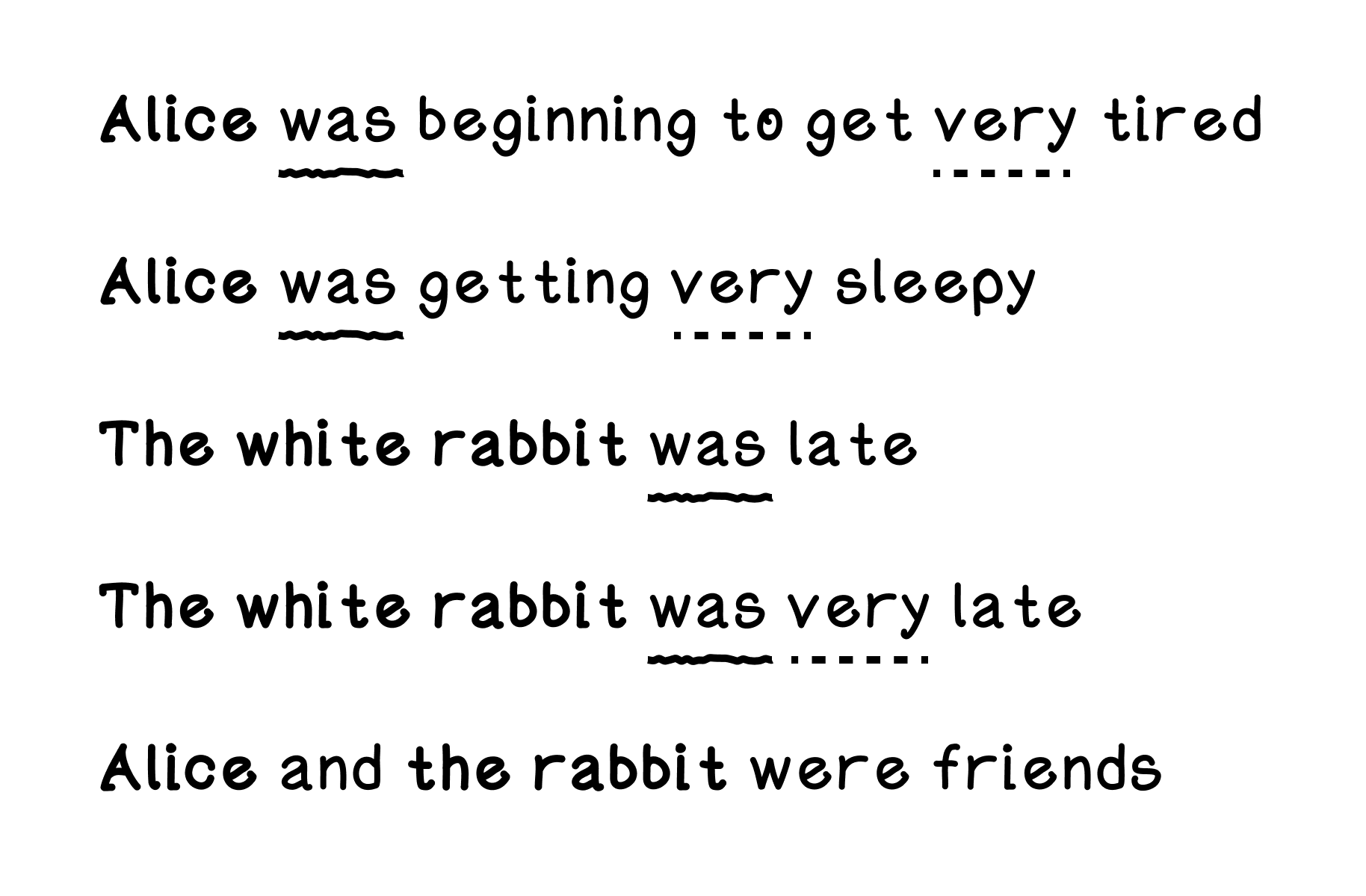

Suppose we have the following short sentences from the book Alice’s Adventures in Wonderland.

- Alice was beginning to get very tired

- Alice was getting very sleepy

- The white rabbit was late

- The white rabbit was very late

- Alice and the rabbit were friends

From simply reading these sentences, you might already recognize some patterns: “Alice” and “The white rabbit” are the subjects of the sentences, “was” normally comes after “Alice” and after “The white rabbit”, and the adverb “very” appears several times (figure 11.3). Spotting these patterns comes naturally to us because our brains are pattern-matching machines.

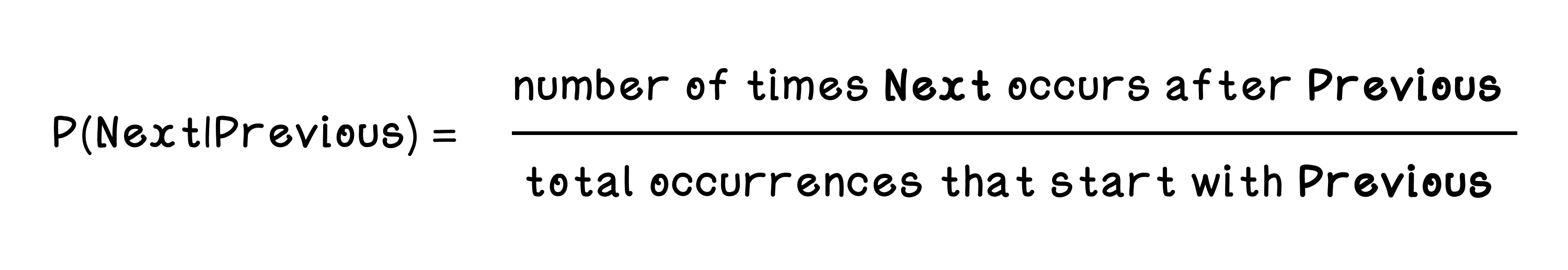

A simple concept in language models is the bigram. Bigrams are two-word sequences, for example “Alice was” or “white rabbit”. If we treat the first word as the word we’re predicting from, and the second word as a possible prediction, we can build a table of probabilities based on the sentences that we have available to train on. Probabilities are calculated using the formula in figure 11.4. The probability of a specific word (Next) following another word (Previous) is the number of times Next occurs directly after Previous, divided by the total number of times Previous is followed by any word. This Next and Previous pairing is a bigram. Think of this like your phone’s autocomplete. If you type “Alice”, the model looks at its training data to see what word usually comes next. If “was” appears 90 times after “Alice”, “ran” appears only 10 times, and no other word follows “Alice”, the model assigns a 90% probability to “was” and suggests it as the next word.
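Written out, the calculation described above looks roughly like this. The notation $C(\text{Previous}, w)$ is an assumption introduced here (it is not taken from the figure) and stands for the number of times the word $w$ directly follows Previous in the training sentences:

$$
P(\text{Next} \mid \text{Previous}) = \frac{C(\text{Previous}, \text{Next})}{\sum_{w} C(\text{Previous}, w)}
$$

In the five sentences above, “alice” is followed by “was” twice and by “and” once, so:

$$
P(\text{was} \mid \text{alice}) = \frac{2}{2 + 1} \approx 0.67, \qquad P(\text{and} \mid \text{alice}) = \frac{1}{2 + 1} \approx 0.33
$$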


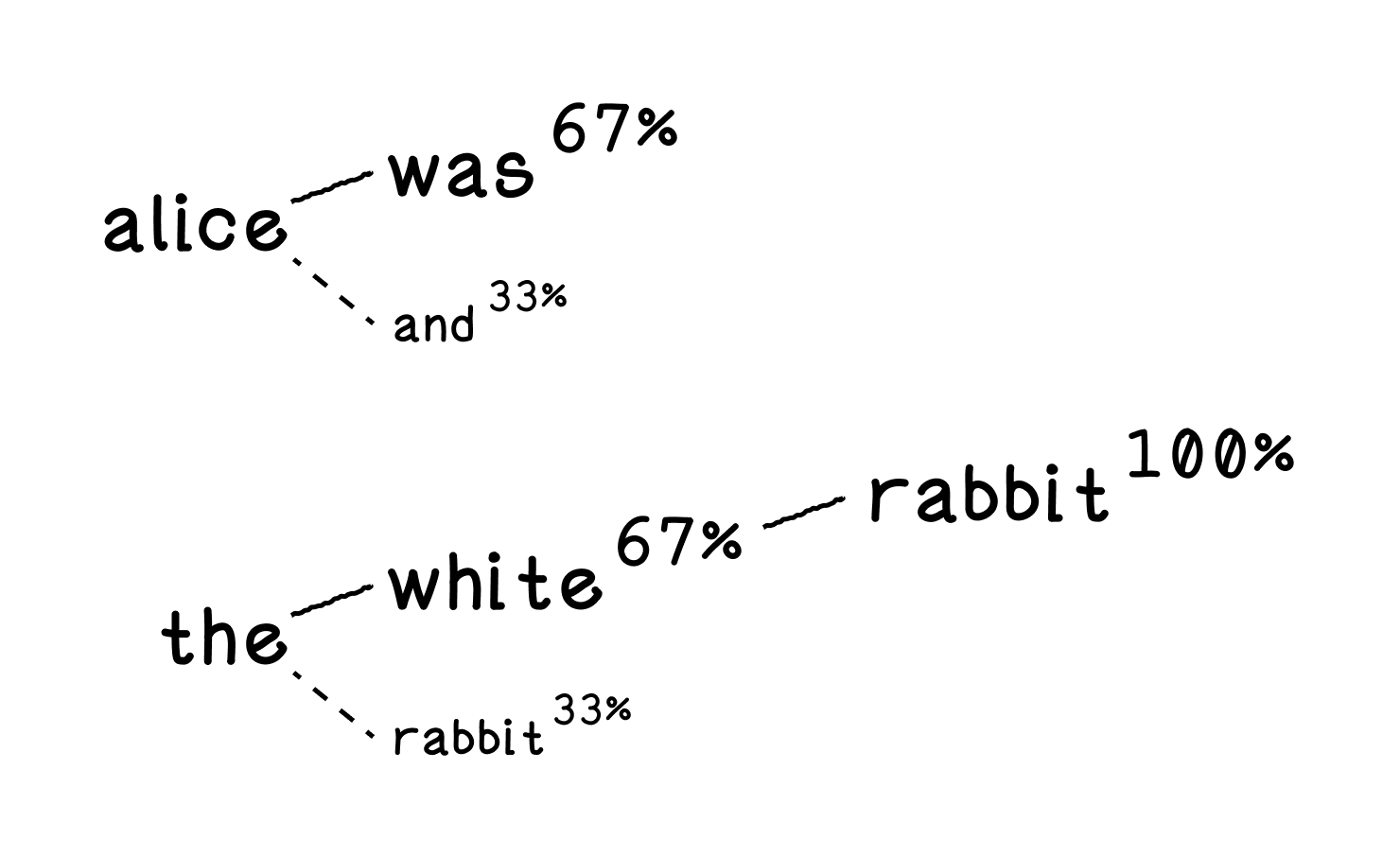
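As a rough sketch of how such a table could be built, the Python below counts adjacent word pairs in the five sentences and divides each count by the total for its first word. Lowercasing and splitting on spaces is an assumed tokenization, and `build_bigram_table` is an illustrative name rather than anything from the original figures.

```python
from collections import Counter, defaultdict

# The five training sentences from the text.
SENTENCES = [
    "Alice was beginning to get very tired",
    "Alice was getting very sleepy",
    "The white rabbit was late",
    "The white rabbit was very late",
    "Alice and the rabbit were friends",
]


def build_bigram_table(sentences):
    """Return {previous: {next: (count, probability)}} for adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Assumed tokenization: lowercase, then split on whitespace.
        tokens = sentence.lower().split()
        # Pair each token with its successor inside the same sentence.
        for previous, nxt in zip(tokens, tokens[1:]):
            counts[previous][nxt] += 1

    table = {}
    for previous, next_counts in counts.items():
        total = sum(next_counts.values())
        table[previous] = {
            nxt: (count, count / total) for nxt, count in next_counts.items()
        }
    return table


table = build_bigram_table(SENTENCES)
for previous in ("alice", "the", "white"):
    for nxt, (count, prob) in table[previous].items():
        print(f"{previous} -> {nxt}: count={count}, probability={prob:.2f}")
```

For “alice”, “the”, and “white”, the printed probabilities round to 0.67, 0.33, and 1.00, which should match the values used in the predictions that follow.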

Now, using this table of probabilities, we can make predictions. Given the word “alice”, the model has two options for the next word: “was” with a probability of 0.67, and “and” with a probability of 0.33. The model picks the most probable option, “was” (67%), so the result is “alice was” (figure 11.5). Given the word “the”, the options are “white” with a probability of 0.67 and “rabbit” with a probability of 0.33, so the completion is “the white”. For the next word, “white” has only one option, “rabbit”, with a probability of 100%, which extends the completion to “the white rabbit”.
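The word-by-word completion walked through above can be sketched as a greedy lookup. The dictionary below hard-codes only the probabilities quoted in the text. The names `BIGRAM_PROBS`, `complete`, and `max_words` are hypothetical, and stopping when a word has no entry is a simplifying assumption.

```python
# Probabilities quoted in the text; a word without an entry ends the completion.
BIGRAM_PROBS = {
    "alice": {"was": 0.67, "and": 0.33},
    "the": {"white": 0.67, "rabbit": 0.33},
    "white": {"rabbit": 1.0},
}


def complete(start, probs, max_words=5):
    """Greedily append the most probable next word until none is known."""
    words = [start]
    # max_words is a safety cap in case the table ever contains a cycle.
    while len(words) < max_words and words[-1] in probs:
        options = probs[words[-1]]
        words.append(max(options, key=options.get))
    return " ".join(words)


print(complete("alice", BIGRAM_PROBS))  # alice was
print(complete("the", BIGRAM_PROBS))    # the white rabbit
```

Always taking the most probable word is only one possible choice. A model could instead sample the next word in proportion to its probability, which would sometimes produce “alice and”.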

You can see how this tiny example of just 5 sentences and 29 “tokens” can start producing a semblance of the intelligent behavior that’s expected from a language model.


Bigram table

| Word | Next Word | Occurrence Count | Probability |
|---|---|---|---|