Generative Image Models

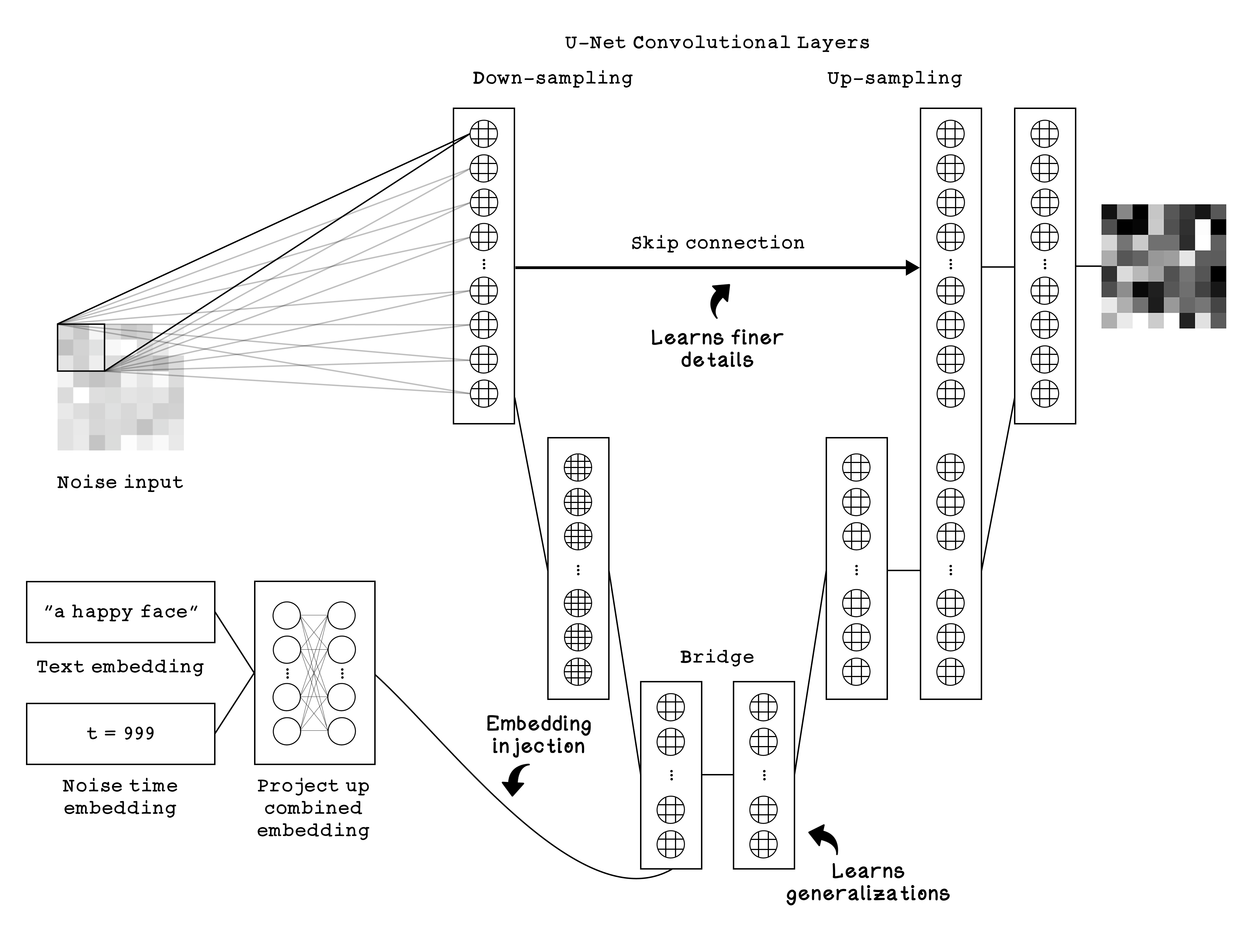

Generative image models are a family of algorithms and artificial neural network architectures that specialize in generating images that accurately match a human-language description.

Imagine a sculptor who has spent his life observing people in deep thought. For years, he walks around the town and studies every person he sees thinking deeply, carefully noting their posture, expressions, and subtle details. Over time, he internalizes what it means to look like someone thinking. He might have seen hundreds of thousands of people pass through the town over the years. We blindfold the sculptor, give him a random block of marble, and ask, “Make me a sculpture of a person thinking”. The sculptor can’t add new material to the marble; instead, he feels the block and chips away a small piece that he’s confident does not look like a person thinking. He repeats this thousands of times, each time feeling the edges of the marble and chipping away a little more “noise”. Slowly and methodically, a coherent image of a person thinking emerges from within the random block of stone.

In the same way, a generative image model, trained on millions of images, begins with random noise and iteratively transforms it into a clear image. It does this not by adding to a blank canvas, but by learning how to remove what doesn’t belong.

The most popular approach to modern image generation is diffusion.

Let’s assume that we want to generate an image of a tree, and that we start with a random, unclear image.

What do you see? This is the starting point for the model: a canvas of pure, random noise. To our eyes, it’s a meaningless, blurry blob. To the model, it is a field of potential, containing every possible image in a faint, ghostly form. At this stage, the model’s job is to take its first look and decide which parts of this noise are least likely to belong in an image of a tree.

After several steps of “denoising”, a faint structure begins to emerge (figure 12.4). What do you see now? Perhaps a head of broccoli? An explosion? A tree? It’s still very ambiguous, but a general shape is taking form. The model has peeled away the most obvious layers of noise, revealing a low-resolution silhouette. It’s beginning to commit to a general structure, but the details are still fluid. Can you identify which parts of the image need to be denoised to see the tree better?

After many more refinement steps, the image becomes much clearer (figure 12.5). It’s almost certainly a tree! The main trunk and the leafy canopy are well-defined. The model has now locked in the high-level concept. Its task is no longer to figure out the overall structure, but to refine the details: carving out the smaller branches and adding texture to the leaves. Can you identify the areas that need to be focused on to reveal more details about the tree?

Finally, after hundreds of steps, we arrive at the final, crisp image (figure 12.6). We can clearly see the tree, complete with detailed bark, individual leaf clusters, and a coherent structure. The model has successfully removed all the noise that was inconsistent with its goal. In a sense, the tree was hidden within the initial random noise all along. The journey from a noisy blob to a sharp final image is the essence of the diffusion process. This concept likely seems counterintuitive. When we think of image generation, we might imagine drawing or painting, because this is how humans create images. Diffusion is the inverse: it starts with noise and iteratively removes the noise that shouldn’t be there.
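The loop described above, starting from noise and removing a little of it at every step, can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not a real diffusion model: the names `toy_denoise`, `step_size`, and the 8x8 `target` "tree" are hypothetical, and the noise estimate is computed from a known target image, whereas a trained network would have to predict that noise from the noisy image alone.

```python
import numpy as np

def toy_denoise(target, steps=200, step_size=0.05, seed=0):
    """Start from pure noise and repeatedly remove a small part of the estimated noise."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # the "random block of marble"
    errors = []
    for _ in range(steps):
        # Stand-in for a trained network (assumption): a real model predicts the
        # noise from x; here we cheat and read it off a known target image.
        predicted_noise = x - target
        # Chip away a small piece of what does not belong.
        x = x - step_size * predicted_noise
        errors.append(float(np.mean((x - target) ** 2)))
    return x, errors

# Hypothetical 8x8 "tree": a bright canopy block above a dimmer trunk.
target = np.zeros((8, 8))
target[1:5, 2:6] = 1.0
target[5:8, 3:5] = 0.5

image, errors = toy_denoise(target)
print(f"error after first step: {errors[0]:.4f}")
print(f"error after last step:  {errors[-1]:.2e}")
```

Each pass shrinks the remaining noise by a fixed fraction, so the error falls steadily, mirroring the progression from blob to silhouette to crisp tree. No single step produces the image; it emerges from many small removals.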

Play with the learning rate and noise (beta) parameters to see how they affect the image generation process.
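To build intuition for the noise (beta) parameter, here is a minimal sketch of how a beta schedule is commonly used to add noise to an image during training. It assumes the standard formulation in which a noisy image at step `t` is a weighted mix of the clean image and Gaussian noise; whether the beta parameter referred to here behaves exactly this way is an assumption. The function names and the default values (1,000 steps, beta from 1e-4 to 0.02) are illustrative choices.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Amount of noise added at each step, increasing linearly (illustrative defaults)."""
    return np.linspace(beta_start, beta_end, num_steps)

def add_noise(x0, t, betas, rng):
    """Jump directly to step t: mix the clean image x0 with Gaussian noise."""
    alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance left after each step
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return xt, noise

betas = linear_beta_schedule()
signal_scale = np.sqrt(np.cumprod(1.0 - betas))  # how strongly the original image survives

rng = np.random.default_rng(0)
x0 = np.ones((8, 8))  # hypothetical clean image
x_early, _ = add_noise(x0, 10, betas, rng)
x_late, _ = add_noise(x0, 999, betas, rng)

print(f"signal kept at the first step: {signal_scale[0]:.5f}")
print(f"signal kept at the last step:  {signal_scale[-1]:.5f}")
```

Larger beta values destroy the image in fewer steps, while smaller values spread the same destruction over a longer, gentler path. The denoising process then has to undo whatever this schedule did, one step at a time.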